
Whisper_auto2lrc is a Python tool that uses the Whisper model to convert all audio files in a folder (and its subfolders) into .lrc subtitle files. If an .lrc file already exists for an audio file, that file is skipped automatically.

It uses the Whisper speech-to-text model released by OpenAI. You specify the folder to process, and the program recursively searches it for audio files and transcribes each one into an .lrc subtitle file.
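The recursive search and the skip rule can be sketched roughly as follows. This is a minimal illustration, not the tool's actual code: the function name `find_pending_audio` and the extension list `AUDIO_EXTENSIONS` are assumptions made for the example.

```python
from pathlib import Path

# Assumed set of extensions; the actual tool may recognise a different list.
AUDIO_EXTENSIONS = {".mp3", ".wav", ".flac", ".m4a", ".ogg"}


def find_pending_audio(root):
    """Return audio files under root (recursively) that have no .lrc beside them."""
    pending = []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file() or path.suffix.lower() not in AUDIO_EXTENSIONS:
            continue
        if path.with_suffix(".lrc").exists():
            continue  # a subtitle file already exists, so skip this audio file
        pending.append(path)
    return pending
```

Calling `find_pending_audio("D:/music")` on a hypothetical folder would return only the audio files that still need a subtitle file.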

It is suitable for users who need to transcribe a large number of audio files into subtitles.

First, install Python. The tool has been tested on Python 3.11.

Then install Whisper (openai/whisper) and the Python dependencies it requires:

pip install git+https://github.com/openai/whisper.git

Pull this repo and install the Python dependencies:

git clone https://github.com/bai0012/Whisper_auto2lrc

cd Whisper_auto2lrc

pip install -r requirements.txt

Download FFmpeg and add the folder containing ffmpeg.exe to the system PATH environment variable. Prebuilt binaries are available from, for example, BtbN/FFmpeg-Builds.
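To confirm that FFmpeg is reachable before running the tool, a small check like the one below may help. It is only a sketch: the helper name `ffmpeg_on_path` is made up for this example, and it relies on the standard library's `shutil.which`.

```python
import shutil


def ffmpeg_on_path():
    """Return the full path of the ffmpeg executable, or None if it is not on PATH."""
    return shutil.which("ffmpeg")


if __name__ == "__main__":
    location = ffmpeg_on_path()
    print(location or "ffmpeg not found on PATH")
```

If this prints "ffmpeg not found on PATH", the folder containing ffmpeg.exe has probably not been added to PATH yet, or the terminal needs to be reopened.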

With the terminal at the base of the project folder, run

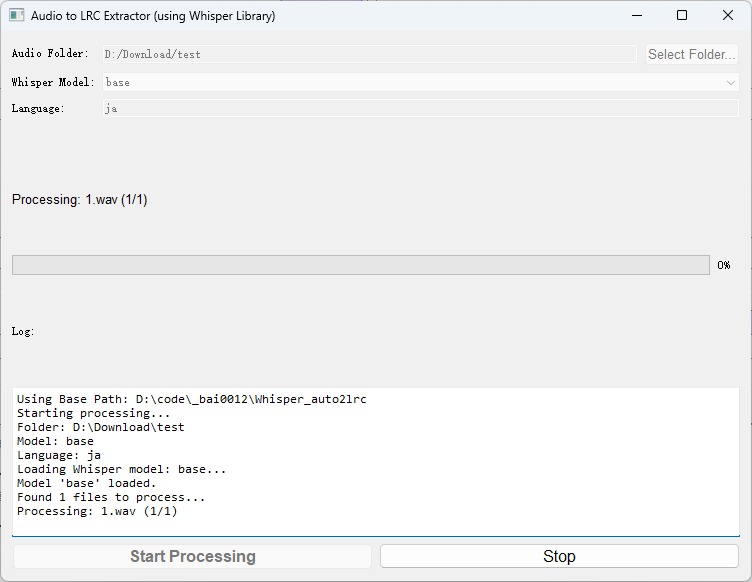

python main.py

In the window, select the folder path, choose the model size to use, enter the language of the audio files to be processed, and click start.
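For readers curious what happens to each file after clicking start, here is a rough sketch of transcribing one audio file and writing LRC-style lines. It is not the program's actual implementation: the helper names (`lrc_timestamp`, `segments_to_lrc`, `transcribe_to_lrc`) and the default arguments are assumptions. It uses `whisper.load_model` and `model.transcribe`, whose result contains a `segments` list with `start` and `text` fields.

```python
def lrc_timestamp(seconds):
    """Format a time in seconds as an LRC tag of the form [mm:ss.xx]."""
    centis = int(round(seconds * 100))
    minutes, rest = divmod(centis, 6000)
    secs, cs = divmod(rest, 100)
    return f"[{minutes:02d}:{secs:02d}.{cs:02d}]"


def segments_to_lrc(segments):
    """Turn Whisper-style segments (dicts with 'start' and 'text') into LRC text."""
    lines = [f"{lrc_timestamp(seg['start'])}{seg['text'].strip()}" for seg in segments]
    return "\n".join(lines) + "\n"


def transcribe_to_lrc(audio_path, model_name="small", language="ja"):
    """Transcribe one file with Whisper and return its LRC text (hypothetical helper)."""
    import whisper  # imported lazily so the helpers above work without Whisper installed

    model = whisper.load_model(model_name)
    result = model.transcribe(str(audio_path), language=language)
    return segments_to_lrc(result["segments"])
```

The model name and language code passed to `transcribe_to_lrc` correspond to the model size and language chosen in the window.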

Whisper can use GPUs that support CUDA. If you are sure that your GPU is correctly installed and supports CUDA but Whisper still does not use it, try the following steps:

pip uninstall torch

pip cache purge

Then install the latest PyTorch using the install command from the PyTorch website.

For example:

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu126

You should now be able to use the graphics card normally.
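One quick way to check whether PyTorch can actually see the GPU is the snippet below. It is a minimal sketch: the function name `detect_device` is an assumption for this example, while `torch.cuda.is_available()` is PyTorch's own call.

```python
def detect_device():
    """Return 'cuda' if PyTorch can use a CUDA GPU, otherwise 'cpu'."""
    try:
        import torch
    except ImportError:
        return "cpu"  # PyTorch is not installed at all
    return "cuda" if torch.cuda.is_available() else "cpu"


print(detect_device())
```

If this prints `cpu` on a machine with a CUDA-capable GPU, the installed PyTorch build is most likely a CPU-only one and the reinstall steps are worth repeating.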

If ffmpeg does not work correctly, you can try the following steps to reinstall it:

pip uninstall ffmpeg

pip uninstall ffmpeg-python

pip install ffmpeg-python

For model sizes, refer to openai/whisper:

| Size | Parameters | English-only model | Multilingual model | Required VRAM | Relative speed |
|---|---|---|---|---|---|
| tiny | 39 M | tiny.en | tiny | ~1 GB | ~10x |
| base | 74 M | base.en | base | ~1 GB | ~7x |
| small | 244 M | small.en | small | ~2 GB | ~4x |
| medium | 769 M | medium.en | medium | ~5 GB | ~2x |
| large | 1550 M | N/A | large | ~10 GB | 1x |
| turbo | 809 M | N/A | turbo | ~6 GB | ~8x |

Choose the model based on the amount of VRAM on your GPU. Generally, the larger the model, the slower the speed but the lower the error rate.

(For example, with an RTX 3060 12 GB you can choose the large model, but with a GTX 1050 Ti 4 GB you can use the small model at most.)
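The VRAM rule of thumb can be written as a small lookup. This is only an illustrative sketch: `MODEL_VRAM_GB` and `pick_model` are hypothetical names, the figures are the approximate values from the table above, and picking the most demanding model that fits is an assumption (turbo, for instance, trades some accuracy for speed).

```python
# (model name, approximate required VRAM in GB), ordered from the smallest
# to the largest VRAM requirement, using the figures from the model table.
MODEL_VRAM_GB = [
    ("tiny", 1),
    ("base", 1),
    ("small", 2),
    ("medium", 5),
    ("turbo", 6),
    ("large", 10),
]


def pick_model(vram_gb):
    """Return the most demanding model whose VRAM estimate fits, or None."""
    best = None
    for name, required in MODEL_VRAM_GB:
        if required <= vram_gb:
            best = name  # later entries need more VRAM, so keep the last fit
    return best
```

With these figures, `pick_model(12)` gives `large` and `pick_model(4)` gives `small`, matching the two GPUs mentioned above.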

Faster Whisper uses the same models but requires less VRAM, so try it out for yourself.

(Faster Whisper can even run the large model on a GTX 1050 Ti 4 GB, although it sometimes runs out of VRAM, so 6 GB of VRAM should be enough to run the large model.)

Whisper supports speech-to-text in many languages. Commonly used ones include:

| Language | Code |
|---|---|
| Chinese | zh |
| English | en |
| Russian | ru |
| Japanese | ja |
| Korean | ko |
| German | de |
| Italian | it |

For more languages, refer to: whisper/tokenizer.py

This program was completed under the guidance of GPT-4, with special thanks to chshkhr for their contributions.