Description

Is your feature request related to a problem? Please describe.

It seems quite common for models to use float16, but the web runtimes don't support that data type. Converting float16 values to float32 is possible, and may work fine for most models, but it results in .onnx files that are roughly twice as big as they need to be.

I'm not sure how the tensor datatypes map to actual wasm/js datatypes, but even if float16 were in some sense "emulated", that would be useful in reducing the .onnx file sizes, even if it has no impact on performance. It would also make the web runtime compatible with more models so that it works "out of the box", instead of requiring (what was for me, as a noob) a tedious process of converting float16 to float32.

I haven't tested it, but I think TF.js can do a sort of "rehydration" of float16 values to float32 after downloading (at least, that's how it looks from this pull request that I came across, though it's aimed at post-training quantization). If that is indeed the case, then perhaps their implementation/choices would be a useful reference.
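To make the "rehydration" idea concrete, here is a rough sketch of what I have in mind: decoding raw float16 bit patterns (held in a `Uint16Array`) into a `Float32Array` on the client after download. This is not taken from ONNX Runtime or TF.js; the function names `float16BitsToNumber` and `rehydrateFloat16` are made up for illustration, and I'm assuming the weights arrive as standard IEEE 754 half-precision values.

```typescript
// Hypothetical helper (not an ONNX Runtime or TF.js API): decode one
// IEEE 754 half-precision value, given as its 16-bit pattern, to a number.
function float16BitsToNumber(bits: number): number {
  const sign = (bits & 0x8000) !== 0 ? -1 : 1;
  const exponent = (bits >> 10) & 0x1f; // 5 exponent bits
  const fraction = bits & 0x03ff;       // 10 fraction bits

  if (exponent === 0) {
    // Zero or subnormal: fraction * 2^-24 (no implicit leading 1)
    return sign * fraction * Math.pow(2, -24);
  }
  if (exponent === 0x1f) {
    // All-ones exponent: infinity if the fraction is zero, NaN otherwise
    return fraction === 0 ? sign * Infinity : NaN;
  }
  // Normal value: (1 + fraction / 2^10) * 2^(exponent - 15)
  return sign * (1 + fraction / 1024) * Math.pow(2, exponent - 15);
}

// Hypothetical helper: expand a buffer of float16 bit patterns to float32.
function rehydrateFloat16(raw: Uint16Array): Float32Array {
  const out = new Float32Array(raw.length);
  raw.forEach((bits, i) => {
    out[i] = float16BitsToNumber(bits);
  });
  return out;
}
```

For example, `rehydrateFloat16(new Uint16Array([0x3c00, 0xc000]))` gives a `Float32Array` containing `1` and `-2`. Every float16 value is exactly representable as float32, so a conversion like this loses nothing; the file stays half the size and only the in-memory copy grows.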

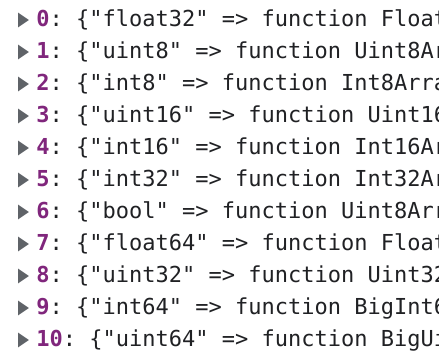

Here are the currently-supported data types for the wasm runtime:

System information

- ONNX Runtime version (you are using): https://cdn.jsdelivr.net/npm/[email protected]/dist/ort.js

Thanks!